Image Upload

Simple image upload

Front end

    <input type="file" id="file">

    // Read the selected file and POST it to /upload as multipart form data.
    function upload() {
      const formData = new FormData();
      const fileField = document.querySelector('#file');
      const file = fileField.files[0];
      formData.append('inputFile', file);

      fetch('/upload', {
        method: 'POST',
        body: formData,
      })
        .then(res => res.json())
        .then(res => {
          // Show the returned URL in the #url input.
          document.querySelector('#url').value = res.url;
        });
    }

The server uses multer, an Express middleware.

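    const crypto = require('crypto');
    const path = require('path');
    // With no options, multer keeps uploaded files in memory (file.buffer).
    const upload = require('multer')();
    const router = require('express').Router();
    // `oss` is the author's object-storage client; its setup is not shown in this post.

    router.post('/upload', upload.fields([{ name: 'inputFile' }]), async (req, res) => {
      const file = req.files['inputFile'][0];
      // Name the object after the MD5 of its content.
      const md5Name = crypto
        .createHash('md5')
        .update(file.buffer)
        .digest('hex');
      const extname = path.extname(file.originalname);
      const Key = '/upload/' + md5Name + extname;
      try {
        const { url } = await oss.upload({
          Key: Key,
          Body: file.buffer
        });
        res.send({
          code: 200,
          data: file.originalname,
          url
        });
      } catch (error) {
        // An Error object serializes to {} in JSON, so send its message instead.
        res.send({ code: 500, msg: error.message });
      }
    });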
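
The MD5-based naming in the handler above can be pulled out into a small function. This is a minimal sketch, not the author's code: `makeKey` and its `prefix` parameter are hypothetical names introduced here. It shows that two uploads with the same bytes and extension end up under the same key.

```javascript
const crypto = require('crypto');
const path = require('path');

// Hypothetical helper: build the storage key from the file's content hash.
function makeKey(buffer, originalName, prefix = '/upload/') {
  const md5Name = crypto.createHash('md5').update(buffer).digest('hex');
  return prefix + md5Name + path.extname(originalName);
}

const a = makeKey(Buffer.from('hello'), 'a.png');
const b = makeKey(Buffer.from('hello'), 'copy-of-a.png');
console.log(a === b); // true: same bytes and extension give the same key
```

One consequence of this choice is that re-uploading an identical file overwrites the same object rather than creating a new one.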

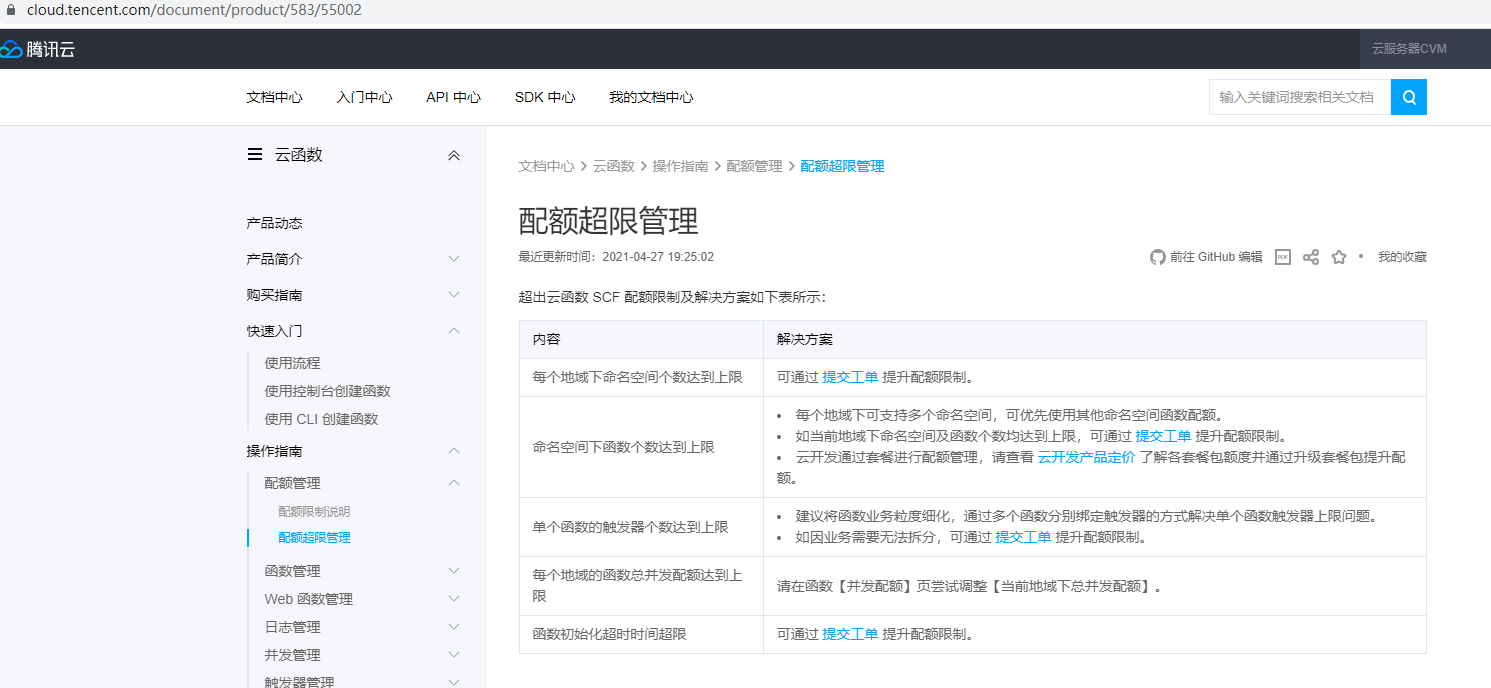

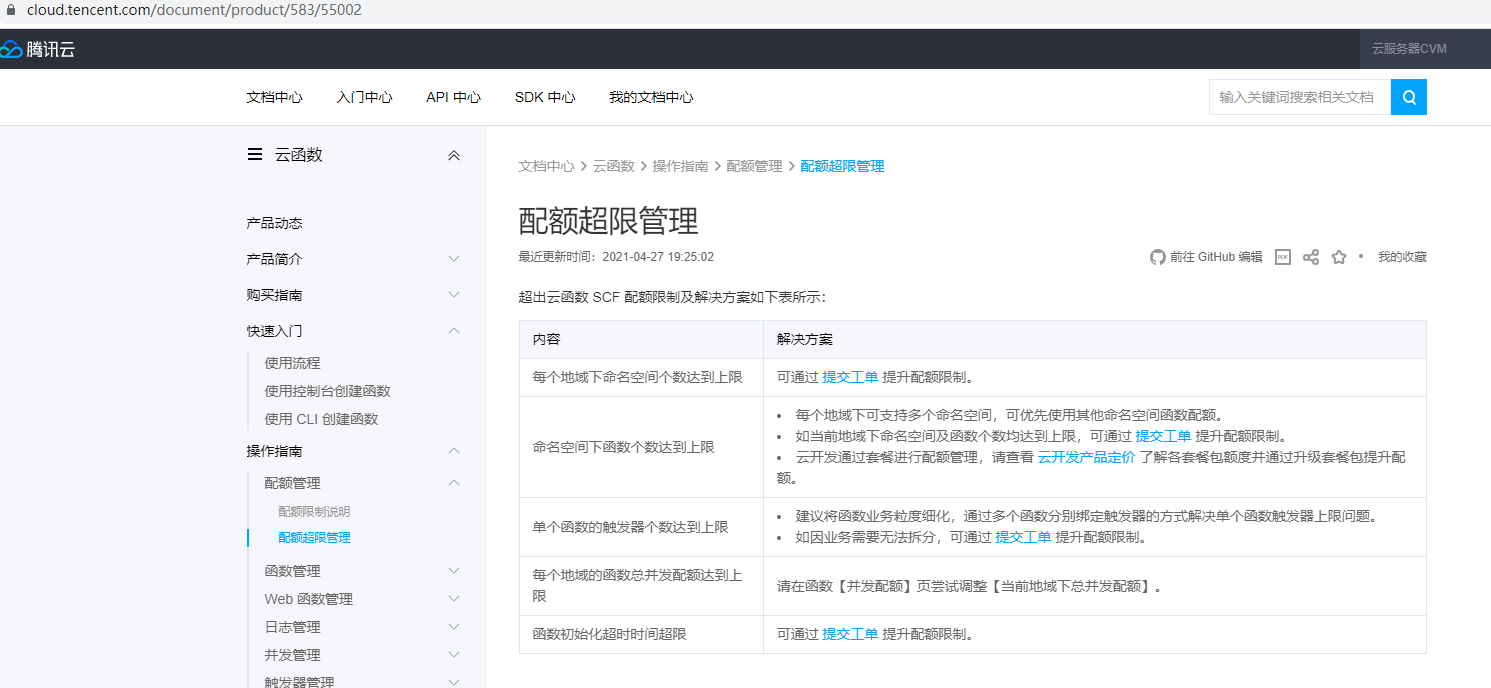

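Finally I deployed it to Tencent Cloud Serverless. Everything worked until an uploaded file exceeded 6 MB.

I checked the docs and filed a support ticket, and the answer was the same both times: the gateway blocks request bodies larger than 6 MB.

No way around it: chunked upload.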

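Chunked upload

Approach

- The front end slices the file, tags the chunks in order, and uploads each one to the back end, which stores them in a temporary folder

- The front end sends an "upload complete" request, and the back end merges the chunks

- Delete the temporary files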
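
The slicing step in the list above can be sketched as a pure function that only computes byte ranges and chunk names, without touching the network. `planChunks` is a hypothetical helper introduced for illustration; the `name.index` naming is the same tagging scheme described above.

```javascript
// Hypothetical helper: split `size` bytes into [start, end) ranges
// of at most `pieceSize` bytes, tagging each with its sequence number.
function planChunks(size, pieceSize, name) {
  const total = Math.ceil(size / pieceSize);
  const chunks = [];
  for (let index = 0; index < total; index++) {
    const start = index * pieceSize;
    const end = Math.min(start + pieceSize, size); // last chunk may be shorter
    chunks.push({ chunkName: `${name}.${index}`, start, end });
  }
  return chunks;
}

const MB = 1024 * 1024;
// A 10 MB file in 4 MB pieces gives three chunks: 4 MB, 4 MB, and 2 MB.
console.log(planChunks(10 * MB, 4 * MB, 'photo.png'));
```

In the browser, each `{ start, end }` pair would be passed to `file.slice(start, end)` to get the Blob for that chunk.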

Front end

The HTML is unchanged.

    const bytesPerPiece = 4 * 1024 * 1024; // 4 MB per chunk

    function upload() {
      const fileField = document.querySelector('#file');
      const file = fileField.files[0];
      if (file.size > bytesPerPiece) {
        // Too large for a single request: upload in chunks.
        chunkUpload(file);
      } else {
        const formData = new FormData();
        formData.append('inputFile', file);
        fetch('/upload', {
          method: 'POST',
          body: formData,
        })
          .then(res => res.json())
          .then(res => {
            document.querySelector('#url').value = res.url;
          });
      }
    }

    async function chunkUpload(file) {
      const { size, name } = file;
      const totalPieces = Math.ceil(size / bytesPerPiece);
      let index = 0;
      console.time('upload finished');
      while (index < totalPieces) {
        const start = index * bytesPerPiece;
        // The last chunk may be shorter than bytesPerPiece.
        const end = Math.min(start + bytesPerPiece, size);
        const chunk = file.slice(start, end);
        // Tag each chunk with its sequence number, e.g. "photo.png.0".
        const sliceIndex = `${name}.${index}`;
        const formData = new FormData();
        formData.append('chunk', chunk);
        formData.append('fileName', name);
        formData.append('chunkName', sliceIndex);
        // Upload the chunks one at a time, in order.
        await fetch('/uploadChunk', {
          method: 'POST',
          body: formData,
        });
        index++;
      }
      console.timeEnd('upload finished');

      // All chunks are on the server: ask it to merge them.
      fetch('/joinChunk', {
        method: 'POST',
        headers: {
          'Content-Type': 'application/json;charset=UTF-8'
        },
        body: JSON.stringify({ fileName: name })
      })
        .then(res => res.json())
        .then(res => {
          document.querySelector('#url').value = res.url;
        });
    }

Back end

    const fs = require('fs');
    const path = require('path');
    const { join } = path;
    // Reuses `router`, `upload`, `crypto`, and `oss` from the simple upload route.
    // '/joinChunk' reads a JSON body, so a JSON body parser such as express.json() must be enabled.

    router.post('/uploadChunk', upload.fields([{ name: 'chunk' }]), async (req, res) => {
      const file = req.files['chunk'][0];
      const buffer = file.buffer;
      const { chunkName, fileName } = req.body;
      // Each file gets its own temporary directory holding its chunks.
      const filePath = join(__dirname, `../temp/chunk/${fileName}`);
      const chunkPath = join(filePath, chunkName);
      // recursive: creates missing parent directories and does nothing if the directory exists.
      fs.mkdirSync(filePath, { recursive: true });
      fs.writeFileSync(chunkPath, buffer);
      res.send({
        code: 200,
        data: filePath,
        msg: `${chunkName} uploaded`
      });
    });

    router.post('/joinChunk', async (req, res) => {
      const { fileName } = req.body;
      const dir = join(__dirname, `../temp/chunk/${fileName}`);
      const file = join(__dirname, `../temp/file/${fileName}`);
      fs.mkdirSync(path.dirname(file), { recursive: true });
      // Sort by the numeric suffix; a plain string sort would put ".10" before ".2".
      const files = fs.readdirSync(dir).sort((a, b) => {
        const aNum = Number(a.split('.').pop());
        const bNum = Number(b.split('.').pop());
        return aNum - bNum;
      });
      // Append each chunk to the merged file, then delete the chunk.
      files.forEach(chunkName => {
        const chunkUrl = join(dir, chunkName);
        const buffer = fs.readFileSync(chunkUrl);
        fs.appendFileSync(file, buffer);
        fs.unlinkSync(chunkUrl);
      });
      fs.rmdirSync(dir);

      const fullFile = fs.readFileSync(file);
      const md5Name = crypto.createHash('md5').update(fullFile).digest('hex');
      const extname = path.extname(file);
      const Key = '/upload/' + md5Name + extname;
      try {
        const { url } = await oss.upload({
          Key: Key,
          Body: fullFile
        });
        res.send({
          code: 200,
          data: fileName,
          url
        });
      } catch (error) {
        res.send({ code: 500, msg: error.message });
      } finally {
        // Remove the merged temporary file whether or not the upload succeeded.
        fs.unlinkSync(file);
      }
    });
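
The merge step depends on reading the chunks back in upload order, which is why the handler sorts by the number after the last dot. A small sketch of why that matters, with `sortChunks` as a hypothetical helper name:

```javascript
// Hypothetical helper: order chunk names by their numeric suffix.
function sortChunks(names) {
  const suffix = name => Number(name.split('.').pop());
  return [...names].sort((a, b) => suffix(a) - suffix(b));
}

const names = ['a.png.10', 'a.png.2', 'a.png.0', 'a.png.1'];
console.log([...names].sort());  // string order: a.png.0, a.png.1, a.png.10, a.png.2
console.log(sortChunks(names));  // numeric order: a.png.0, a.png.1, a.png.2, a.png.10
```

With the default string sort, any file of more than ten chunks would be stitched together in the wrong order.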

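Local testing went without a hitch. Then I deployed to serverless, and guess what?

After rereading the docs and pestering support more times than I can count, I confirmed that the gateway's 6 MB limit applies to the size after base64 encoding.

The size of the binary payload and the Content-Length are not what counts; the base64-encoded size is.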
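
To get a feel for what a base64-based limit means for raw file sizes, here is a rough calculation. It assumes the limit is exactly 6 × 1024 × 1024 bytes and that it applies to the base64 form of the whole request body; both are assumptions for this sketch, and the function names are hypothetical.

```javascript
// Base64 encodes every 3 input bytes as 4 output characters (with padding).
function base64Size(rawBytes) {
  return 4 * Math.ceil(rawBytes / 3);
}

// Largest raw payload whose base64 form still fits in `limitBytes`.
function maxRawBytes(limitBytes) {
  return Math.floor(limitBytes / 4) * 3;
}

const MB = 1024 * 1024;
console.log(base64Size(6 * MB) / MB);  // 8: a 6 MB body grows to 8 MB
console.log(maxRawBytes(6 * MB) / MB); // 4.5: raw bytes that fit under 6 MB
```

A multipart request also carries boundaries, headers, and the other form fields, so the usable size per chunk is somewhat below this figure.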

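That leaves the following options:

- Store the chunks directly in COS, then read them back from COS and merge them into a single object in COS

  - Pro: COS and SCF are in the same region, so the traffic between them is free

  - Con: the chunks are still subject to the gateway's request body size limit

- Use SCF's temporary directory, which is readable and writable: merge the chunks there, then upload the result to COS

  - Pro: only one transfer to COS

  - Con: the chunks are still subject to the gateway's request body size limit

- Upload from the front end straight to COS with the SDK

  - Pro: bypasses the gateway, so there is no size limit

  - Con: MD5 hashing and authentication both have to move to the front end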
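
For the temporary-directory option, the chunk paths would have to point into the writable temp directory instead of a folder next to the code. A minimal sketch, assuming `os.tmpdir()` resolves to that writable directory in the function's runtime; `chunkDir` is a hypothetical helper, not part of the code above.

```javascript
const os = require('os');
const path = require('path');

// Hypothetical helper: where to stage the chunks of one upload.
function chunkDir(fileName, root = os.tmpdir()) {
  // basename() drops any directory components from a client-supplied name.
  return path.join(root, 'chunk', path.basename(fileName));
}

console.log(chunkDir('photo.png'));
console.log(chunkDir('../../etc/passwd', '/tmp')); // stays under /tmp/chunk
```

`path.basename` only strips directory components; a fuller version would also reject names such as `..` before using them in a path.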

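All things considered, I'm going to stop routing uploads through the gateway and upload directly from the front end.

The API service should just provide things like authentication.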
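
One way this split could look: the API only signs, and the browser sends the bytes straight to storage. The sketch below swaps the SDK for a plain pre-signed URL to stay self-contained. The `/sign` endpoint, its `{ url }` response shape, and `directUpload` are all hypothetical names assumed for illustration.

```javascript
// Hypothetical flow: ask the API for a pre-signed URL, then PUT the file to it.
// `fetchImpl` is injectable so the function can be exercised without a network.
async function directUpload(file, key, fetchImpl = fetch) {
  // Step 1: the API authenticates the user and signs an upload URL (assumed endpoint).
  const signRes = await fetchImpl('/sign?key=' + encodeURIComponent(key));
  const { url } = await signRes.json();

  // Step 2: the file goes directly to object storage, not through the gateway.
  const putRes = await fetchImpl(url, { method: 'PUT', body: file });
  if (!putRes.ok) {
    throw new Error('upload failed with status ' + putRes.status);
  }
  // Strip the signature query string to get the plain object URL.
  return url.split('?')[0];
}
```

In the page, this would be called as `directUpload(fileField.files[0], key)` and the returned URL written to the `#url` input, as in the earlier handlers.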